Great Info About How To Check Robots.txt

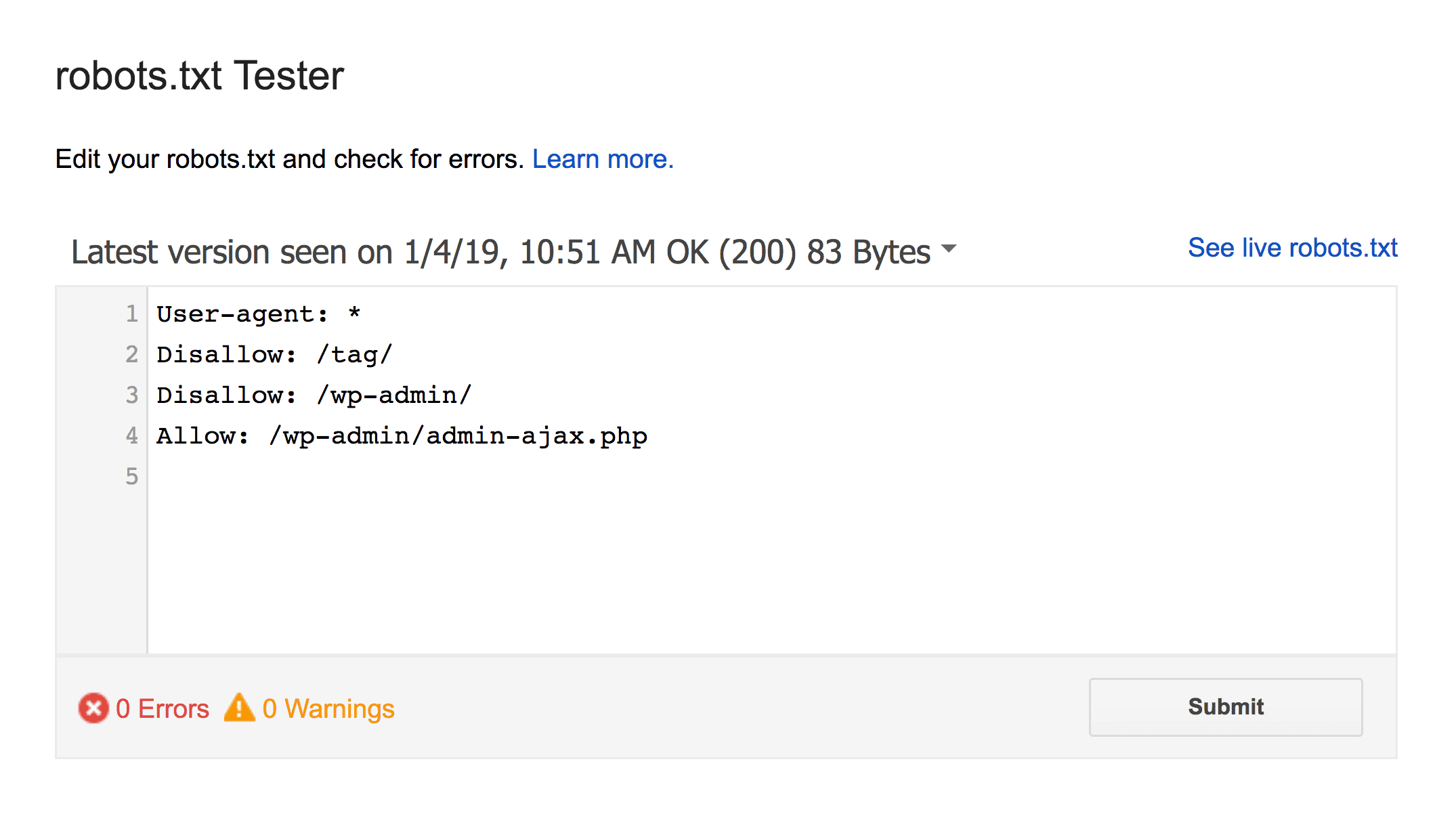

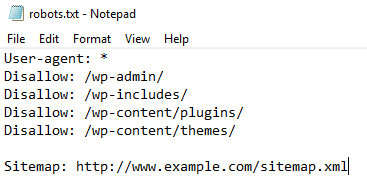

A simple robots.txt file looks like this:
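As a minimal sketch, the snippet below embeds a small robots.txt as a string and parses it with Python's standard `urllib.robotparser` module. The paths, the sitemap URL, and the `example.com` host are hypothetical and chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical example file: block /admin/ for every crawler, allow the rest.
SIMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() takes an iterable of lines, so no network request is needed here.
parser.parse(SIMPLE_ROBOTS_TXT.splitlines())

admin_allowed = parser.can_fetch("*", "https://www.example.com/admin/login")
blog_allowed = parser.can_fetch("*", "https://www.example.com/blog/post")

print("admin allowed:", admin_allowed)
print("blog allowed:", blog_allowed)
```

Note that `urllib.robotparser` applies the first rule that matches, so the order of the Disallow and Allow lines matters in this sketch.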


Robots.txt is a text file that gives search engine crawlers instructions on how to crawl your site, including which types of pages they may or may not access. It is often the gatekeeper of your site. To check it with a hosted tool, sign in to an account where the current site has been confirmed on the platform.

Remember to use all lower case for the filename: robots.txt. The file is part of your website and provides crawling rules for search engine robots. Unless you use a plugin that dynamically generates robots.txt, you should see a robots.txt file at the root of your site.
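Because the file sits at the root and uses a lower-case name, its address can be derived from any page URL on the same host. The helper below is a small sketch of that idea; the function name `robots_txt_url` and the example URL are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Keep the scheme and host, drop the path, query, and fragment,
    # and point at the lower-case filename in the site root.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=7"))
```

Opening the resulting address in a browser is the quickest way to confirm that the file exists.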

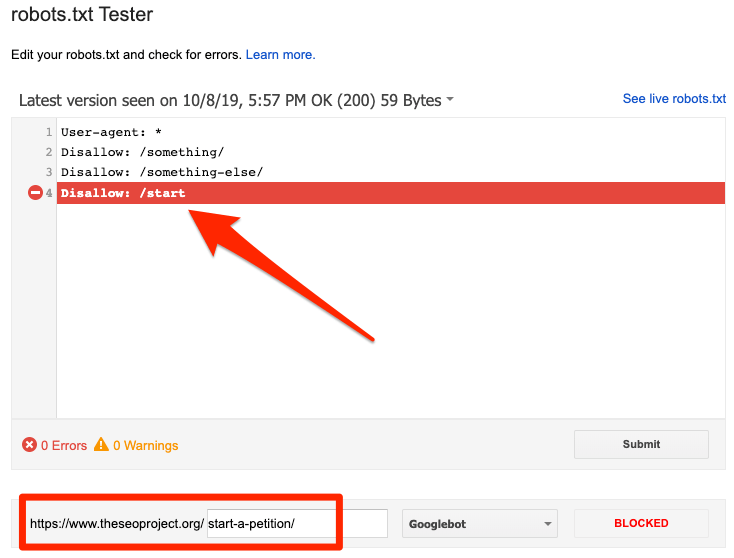

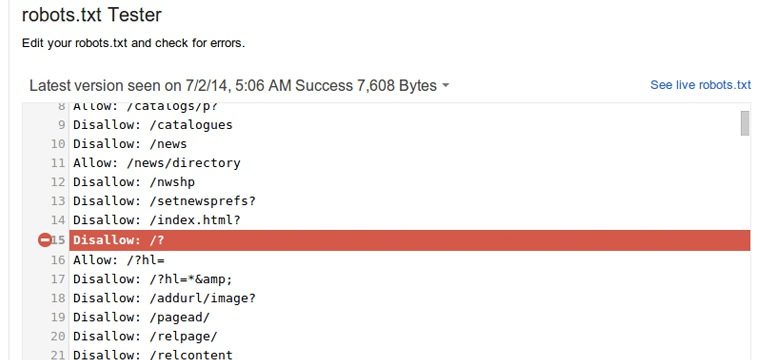

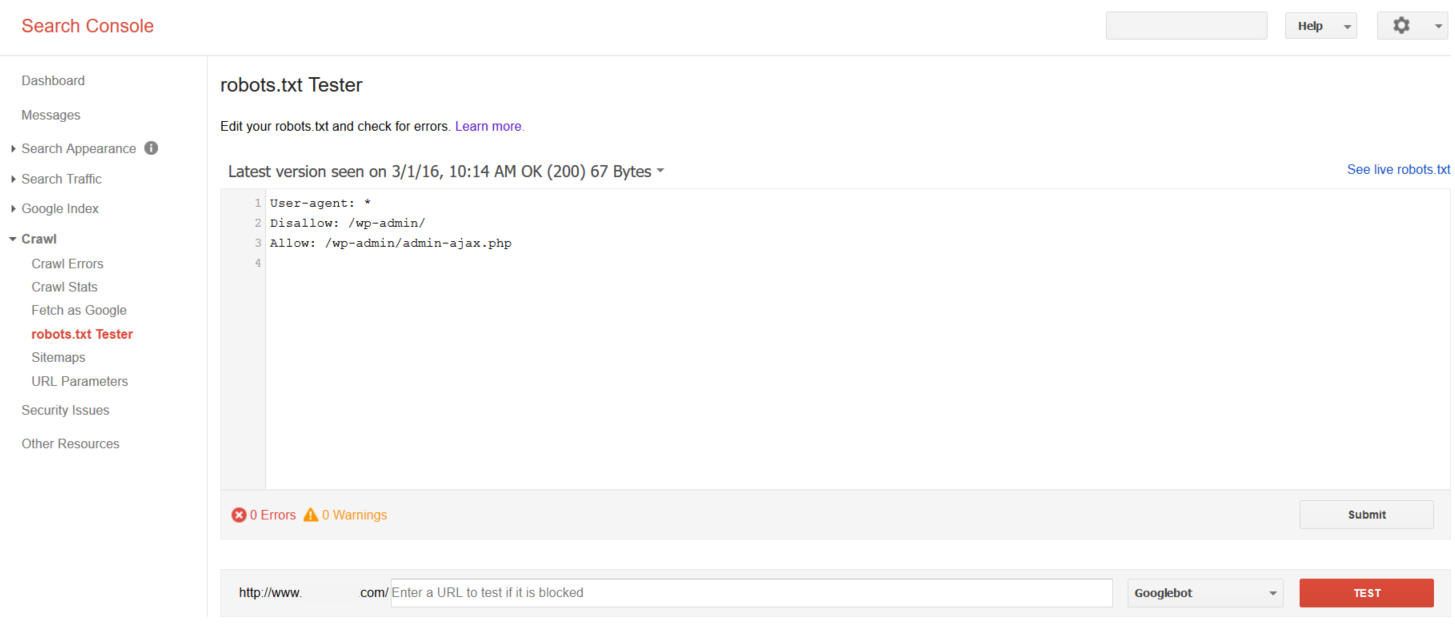

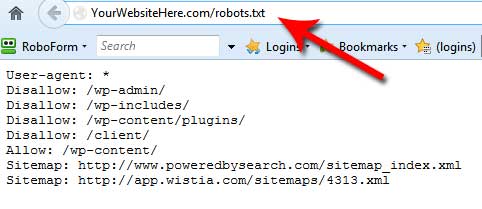

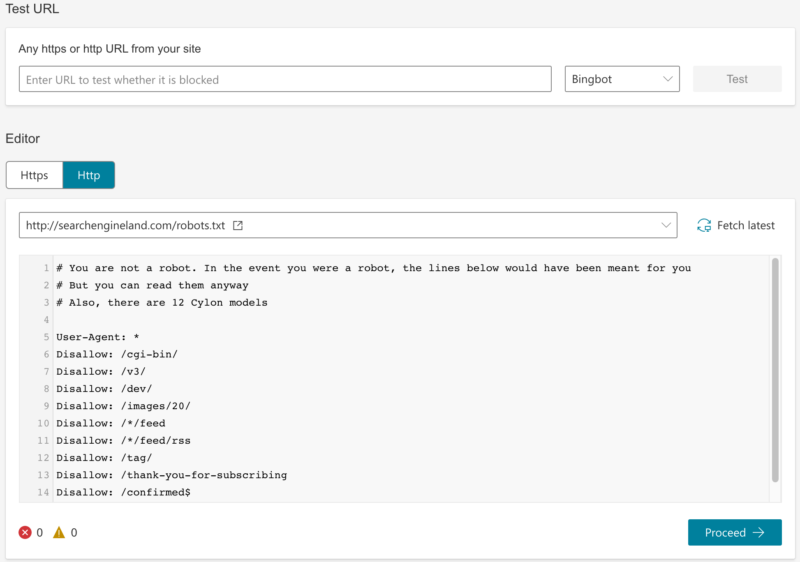

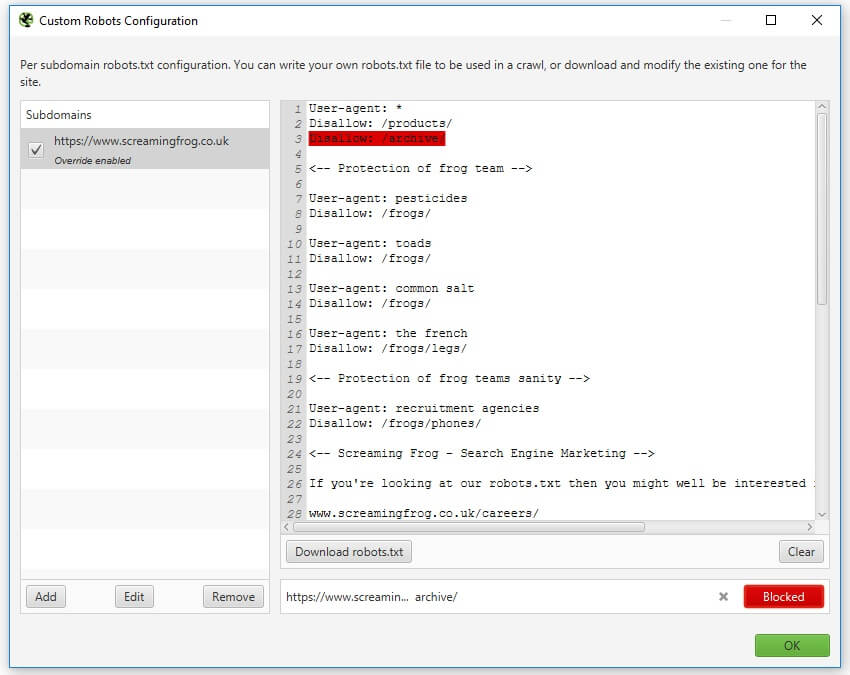

While you can view the contents of your robots.txt by navigating to the robots.txt URL, the best way to test and validate it is through the robots.txt Tester tool in Google Search Console, where you can also edit the file on the page. To test and validate your robots.txt, or to check whether a URL is blocked, which statement is blocking it, and for which user agent, enter the URL of the website property that needs to be checked.
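To illustrate what "which statement is blocking it and for which user agent" means, here is a simplified local sketch. It is not how any particular tester tool is implemented: the helper names are hypothetical, it uses plain prefix matching with a longest-match rule (Allow wins ties), and it deliberately ignores `*` and `$` wildcards inside paths.

```python
from typing import List, Optional, Tuple
from urllib.parse import urlsplit

Rule = Tuple[str, str, int]  # (directive, path, line number)


def parse_groups(robots_txt: str) -> List[Tuple[List[str], List[Rule]]]:
    """Split a robots.txt body into (user agents, rules) groups."""
    groups: List[Tuple[List[str], List[Rule]]] = []
    agents: List[str] = []
    rules: List[Rule] = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if rules:  # a user-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules = [], []
            agents.append(value.lower())
        elif field in ("allow", "disallow") and agents:
            rules.append((field, value, number))
    if agents:
        groups.append((agents, rules))
    return groups


def find_blocking_rule(
    robots_txt: str, user_agent: str, url: str
) -> Optional[Tuple[str, str, int]]:
    """Return (group agent, blocking path, line number), or None if not blocked."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    ua = user_agent.lower()
    groups = parse_groups(robots_txt)

    # Prefer a group that names this crawler; otherwise use the "*" group.
    chosen = None
    for agents, rules in groups:
        named = [a for a in agents if a and a != "*" and a in ua]
        if named:
            chosen = (named[0], rules)
            break
    if chosen is None:
        for agents, rules in groups:
            if "*" in agents:
                chosen = ("*", rules)
                break
    if chosen is None:
        return None

    agent, rules = chosen
    best = None
    for directive, rule_path, number in rules:
        # An empty path (e.g. "Disallow:") matches nothing.
        if rule_path and path.startswith(rule_path):
            key = (len(rule_path), directive == "allow")
            if best is None or key > best[0]:
                best = (key, directive, rule_path, number)
    if best is not None and best[1] == "disallow":
        return agent, best[2], best[3]
    return None


SAMPLE = """\
User-agent: Googlebot
Disallow: /private/
Allow: /private/press/

User-agent: *
Disallow: /tmp/
"""

print(find_blocking_rule(SAMPLE, "Googlebot", "https://www.example.com/private/report.html"))
```

For the hypothetical sample file, the call reports that the `/private/` statement on line 2 of the Googlebot group blocks the URL, while a URL under `/private/press/` would come back as `None` because the longer Allow rule takes precedence.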

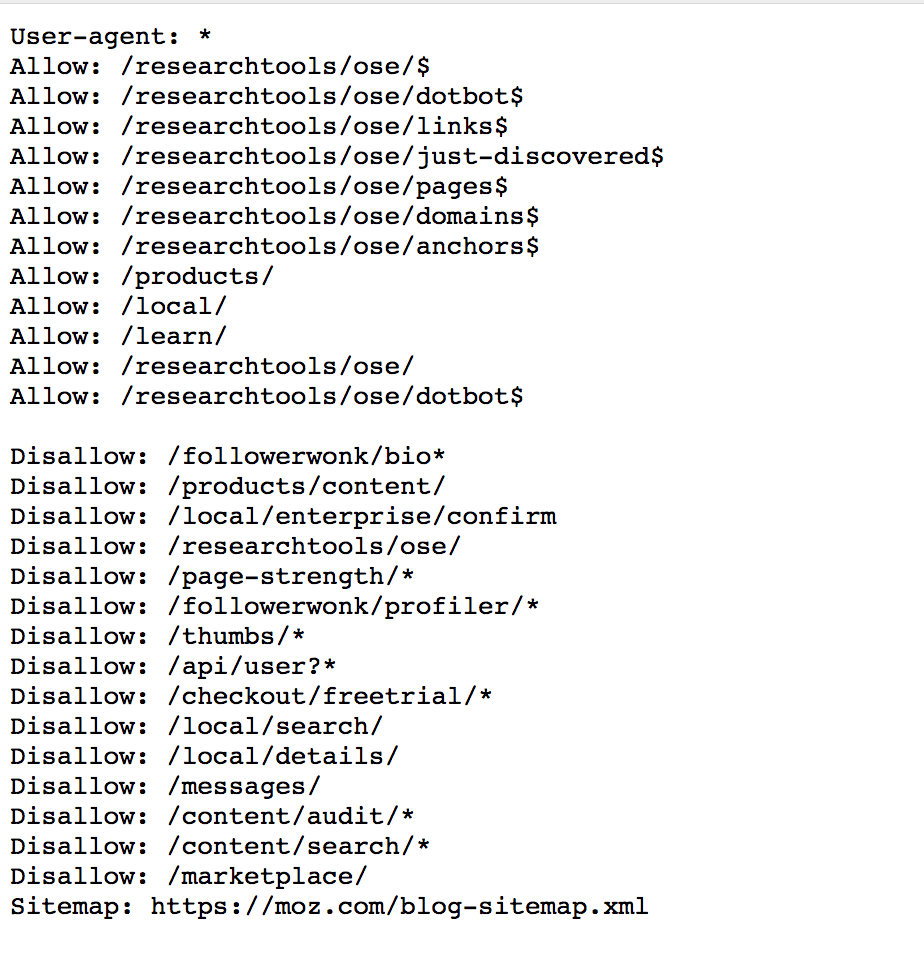

Without any context, a robots.txt checker can only check whether you have any syntax mistakes or whether you're using deprecated directives such as noindex. Now only the old version of Google Search Console has a tool to test the robots.txt file. Generally speaking, the content of the robots.txt file should be viewed as a recommendation to search crawlers that defines the rules for crawling the website, not as an enforced restriction.
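A context-free check of this kind can be approximated in a few lines. The sketch below flags lines without a `:` separator, unrecognized directive names, rules that appear before any user-agent line, and the `noindex` directive mentioned above. The function name and the set of directives treated as known are assumptions made for this example, not an official list.

```python
from typing import List, Tuple

# Assumed set of recognized directives for this sketch.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}
# Directives the text describes as deprecated.
DEPRECATED_DIRECTIVES = {"noindex"}


def lint_robots_txt(robots_txt: str) -> List[Tuple[int, str]]:
    """Return (line number, message) pairs for basic syntax problems."""
    problems: List[Tuple[int, str]] = []
    seen_user_agent = False
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field in DEPRECATED_DIRECTIVES:
            problems.append((number, f"deprecated directive '{field}'"))
        elif field not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow") and not seen_user_agent:
            problems.append((number, f"'{field}' appears before any user-agent line"))
    return problems


BROKEN_SAMPLE = """\
User-agent: *
Disalow: /tmp/
Noindex: /drafts/
Disallow /private/
"""

for line_number, message in lint_robots_txt(BROKEN_SAMPLE):
    print(f"line {line_number}: {message}")
```

A check like this cannot tell whether a rule blocks the right pages; it only catches mistakes that are visible in the file itself.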

A robots.txt checker tool can help you find the most common errors in robots.txt files. You can also check whether the resources a page depends on are disallowed. The robots.txt file is accessible to everyone on the internet.
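Checking page resources amounts to running the same test over every script, stylesheet, and image URL the page loads. The sketch below does this with the standard `urllib.robotparser` module; the function name, the sample rules, and the resource URLs are hypothetical.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_resources(
    robots_txt: str, user_agent: str, resource_urls: Iterable[str]
) -> List[str]:
    """Return the resource URLs that the given crawler may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules and page resources, for illustration only.
RULES = """\
User-agent: *
Disallow: /assets/js/
"""

PAGE_RESOURCES = [
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/assets/images/logo.png",
]

blocked = blocked_resources(RULES, "Googlebot", PAGE_RESOURCES)
print(blocked)
```

In this example only the script under `/assets/js/` is reported, which is the kind of result that suggests a crawler might not be able to render the page as visitors see it.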